Hello, I am Carlota Parés-Morlans. I am a CS PhD student at Stanford University advised by Professor Jeannette Bohg in the IPRL lab. My research interests lie broadly in robotics, computer vision, and multi-modal sensing.

I recently graduated from Stanford University with an MS in Electrical Engineering, supported by a “La Caixa” Fellowship. I received the Dean's Graduate Student Advisory Council Exceptional Master's Student Award, which recognizes students' academic excellence, intellectual achievement, and remarkable contribution to the Stanford community and beyond.

Previously, I graduated from Ramon Llull University with a BS in Computer Engineering and a BS in Networks and Telecommunications Engineering. During my time as an undergraduate, I worked with Professor David Miralles on multi-modal and cross-modal robotic perception.

AO-Grasp: Articulated Object Grasp Generation

Carlota Parés Morlans*, Claire Chen*, Yijia Weng, Michelle Yi, Yuying Huang, Nick Heppert, Linqi Zhou, Leonidas Guibas, and Jeannette Bohg.

arXiv preprint arXiv:2310.15928 (2023).

IROS 2024.

We introduce AO-Grasp, a grasp proposal method that generates stable and actionable 6-degree-of-freedom (6-DoF) grasps for articulated objects. Our generated grasps enable robots to interact with articulated objects, for example by opening and closing cabinets and appliances. Given a segmented partial point cloud of a single articulated object, AO-Grasp predicts the best grasp points on the object with a novel Actionable Grasp Point Predictor model and then finds corresponding grasp orientations for each point by leveraging a state-of-the-art rigid object grasping method. We train AO-Grasp on our new AO-Grasp Dataset, which contains 48K actionable parallel-jaw grasps on synthetic articulated objects. In simulation, AO-Grasp achieves higher grasp success rates than existing rigid object grasping and articulated object interaction baselines on both train and test categories. Additionally, we evaluate AO-Grasp on 120 real-world scenes of objects with varied geometries, articulation axes, and joint states, where AO-Grasp produces successful grasps in 67.5% of scenes, compared with 33.3% for the baseline.

@article{morlans2023grasp,
  title={AO-Grasp: Articulated Object Grasp Generation},
  author={Morlans, Carlota Par{\'e}s and Chen, Claire and Weng, Yijia and Yi, Michelle and Huang, Yuying and Heppert, Nick and Zhou, Linqi and Guibas, Leonidas and Bohg, Jeannette},
  journal={arXiv preprint arXiv:2310.15928},
  year={2023}
}

Multi-modal self-adaptation during object recognition in an artificial cognitive system

David Miralles, Guillem Garrofé, Carlota Parés, Alejandro González, Gerard Serra, Alberto Soto, Xavier Sevillano, Hans Op de Beeck, and Haemy Lee Masson

Scientific Reports 12, no. 1 (2022): 3772.

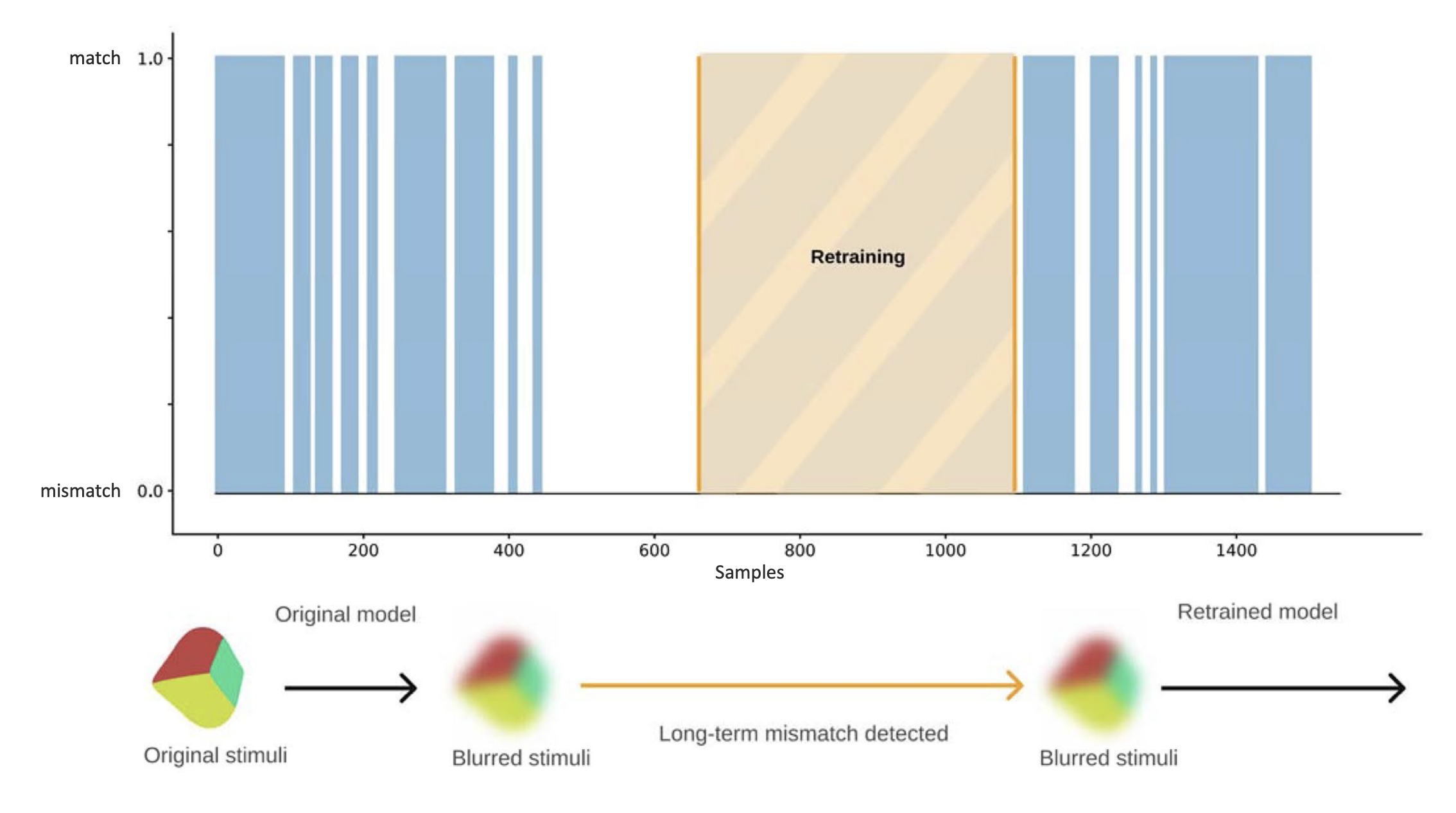

The cognitive connection between the senses of touch and vision is probably the best-known case of multimodality. Recent discoveries suggest that the mapping between the two senses is learned rather than innate. This evidence opens the door to a dynamic multimodality that allows individuals to adaptively develop within their environment. By mimicking this aspect of human learning, we propose a new multimodal mechanism that allows artificial cognitive systems (ACS) to quickly adapt to unforeseen perceptual anomalies generated by the environment or by the system itself. In this context, visual recognition systems have advanced remarkably in recent years thanks to the creation of large-scale datasets together with the advent of deep learning algorithms. However, this has not been the case for the haptic modality, where the lack of two-handed dexterous datasets has limited the ability of learning systems to process the tactile information of human object exploration. This data imbalance hinders the creation of synchronized datasets that would enable the development of multimodality in ACS during object exploration. In this work, we use a recently generated multimodal dataset, collected from tactile sensors placed on a set of objects, that captures haptic data from human manipulation together with the corresponding visual counterpart. Using this data, we create a multimodal learning transfer mechanism capable of both detecting sudden and permanent anomalies in the visual channel and maintaining visual object recognition performance by retraining the visual mode for a few minutes using haptic information. Our proposal for perceptual awareness and self-adaptation is of noteworthy relevance because it can be applied by any system that satisfies two very generic conditions: it can classify each mode independently, and it is provided with a synchronized multimodal dataset.

@article{miralles2022multi,
  title={Multi-modal self-adaptation during object recognition in an artificial cognitive system},
  author={Miralles, David and Garrof{\'e}, Guillem and Par{\'e}s, Carlota and Gonz{\'a}lez, Alejandro and Serra, Gerard and Soto, Alberto and Sevillano, Xavier and de Beeck, Hans Op and Masson, Haemy Lee},
  journal={Scientific Reports},
  volume={12},
  number={1},
  pages={3772},
  year={2022},
  publisher={Nature Publishing Group UK London}
}

Virtual haptic system for shape recognition based on local curvatures

Guillem Garrofé, Carlota Parés, Anna Gutiérrez, Conrado Ruiz, Gerard Serra, and David Miralles

Advances in Computer Graphics: 38th Computer Graphics International Conference, CGI 2021, Virtual Event, September 6-10, 2021, Proceedings 38. Springer International Publishing, 2021.

Haptic object recognition is widely used in various robotic manipulation tasks. Using shape features obtained at either a local or a global scale, robotic systems can identify objects by touch alone. Most existing haptic systems either use a robotic arm with end-effectors to identify the shape of an object from contact points, or use a surface capable of recording pressure patterns. In this work, we introduce a novel haptic capture system based on the local curvature of an object. We present a haptic sensor system comprising three aligned, equally spaced fingers that move toward the surface of an object at the same speed. When an object is touched, our system records the relative contact times of the sensors. Simulating our approach in a virtual environment, we show that this new local, low-dimensional geometric feature can be used effectively for shape recognition. With as few as 10 samples, our system achieves an accuracy of over 90% without using any sampling strategy or any associated spatial information.
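The abstract does not give a formula for the feature, so the following is only an illustrative sketch of why three relative contact times carry local curvature information, not the method used in the paper. It assumes (hypothetically) that the fingers sit at x = -spacing, 0, +spacing on a common baseline and advance at a known constant speed, so each contact time maps to a penetration depth. The curvature of the circle through the three contact points (the Menger curvature) then follows from the triangle they form. The function name and parameters are assumptions for illustration.

```python
import math


def curvature_from_contact_times(times, spacing, speed):
    """Hypothetical sketch: signed curvature of the circle through three contacts.

    Assumes three aligned fingers at x = -spacing, 0, +spacing that start on a
    common baseline and advance at a constant `speed`; finger i touches the
    surface after times[i]. Not the feature definition from the paper.
    """
    if len(times) != 3:
        raise ValueError("expected exactly three contact times")
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    # Only relative times matter: shifting all times moves every point equally.
    t0 = min(times)
    xs = (-spacing, 0.0, spacing)
    pts = [(x, speed * (t - t0)) for x, t in zip(xs, times)]
    (x1, y1), (x2, y2), (x3, y3) = pts
    # 2D cross product = twice the signed area of the contact triangle.
    cross = (x2 - x1) * (y3 - y1) - (y2 - y1) * (x3 - x1)
    a = math.dist(pts[0], pts[1])
    b = math.dist(pts[1], pts[2])
    c = math.dist(pts[0], pts[2])
    # Menger curvature: k = 4 * area / (a * b * c) = 2 * cross / (a * b * c).
    # Positive when the middle finger touches first (surface bulges toward it).
    return 2.0 * cross / (a * b * c)


# Usage: a surface that is a circle of radius 2, sampled with unit spacing/speed.
depth = 2 - math.sqrt(3)
k = curvature_from_contact_times([depth, 0.0, depth], spacing=1.0, speed=1.0)
print(round(k, 6))  # 0.5, i.e. 1 / radius
```

Equal contact times give zero curvature (a flat surface), and adding the same offset to all three times leaves the result unchanged, which matches the abstract's emphasis on relative rather than absolute times.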

@inproceedings{garrofe2021virtual,
  title={Virtual haptic system for shape recognition based on local curvatures},
  author={Garrof{\'e}, Guillem and Par{\'e}s, Carlota and Guti{\'e}rrez, Anna and Ruiz, Conrado and Serra, Gerard and Miralles, David},
  booktitle={Advances in Computer Graphics: 38th Computer Graphics International Conference, CGI 2021, Virtual Event, September 6--10, 2021, Proceedings 38},
  pages={41--53},
  year={2021},
  organization={Springer}
}

Heterogeneous wireless IoT architecture for natural disaster monitorization

Joaquim Porte, Alan Briones, Josep Maria Maso, Carlota Pares, Agustin Zaballos, and Joan Lluis Pijoan.

EURASIP Journal on Wireless Communications and Networking, 2020(1), 1-27.

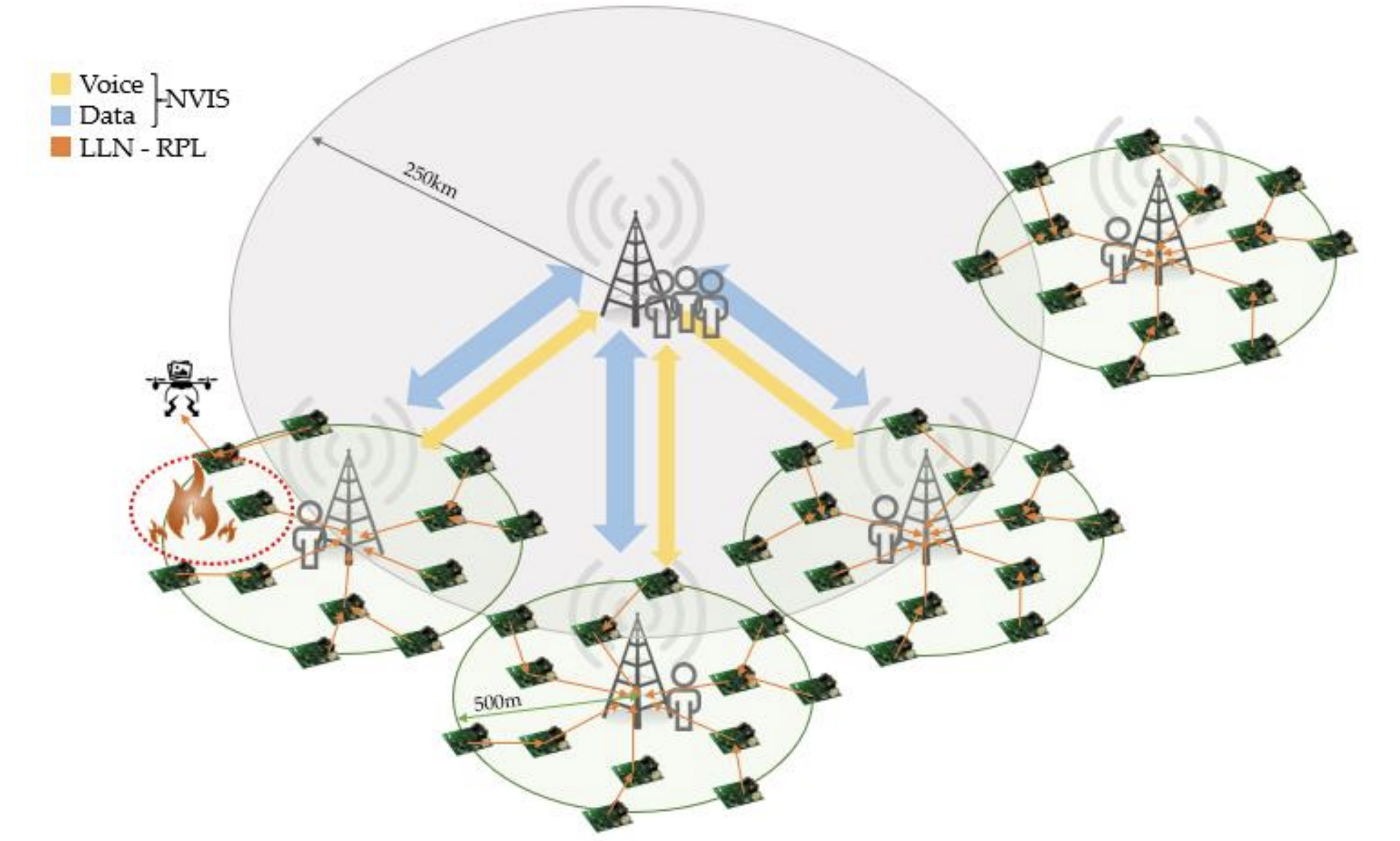

A heterogeneous sensor network offers an extremely effective means of communicating with the international community, first responders, and humanitarian assistance agencies as long as affected populations have access to the Internet during disasters. When communication networks fail in an emergency, emergency services struggle to communicate with each other. In such situations, field data can be collected from nearby sensors by deploying a wireless sensor network and a delay-tolerant network over the region to be monitored. When data has to be sent to the operations center without any telecommunication infrastructure available, high-frequency (HF) radio, satellite links, and high-altitude platforms are the only options, and HF with Near Vertical Incidence Skywave (NVIS) is the most cost-effective and easiest to install. Sensed data in disaster situations can serve a wide range of interests and needs (scientific, technical, and operational information for decision-makers). The proposed monitoring architecture addresses communication with the public during emergencies using movable and deployable resource unit technologies for sensing, exchanging, and distributing information for humanitarian organizations. The challenge is to show how sensed data and information management contribute to a more effective and timely response that improves the quality of life of the affected populations. Our proposal was tested under real emergency conditions in Europe and Antarctica.

@article{porte2020heterogeneous,
  title={Heterogeneous wireless IoT architecture for natural disaster monitorization},
  author={Porte, Joaquim and Briones, Alan and Maso, Josep Maria and Pares, Carlota and Zaballos, Agustin and Pijoan, Joan Lluis},
  journal={EURASIP Journal on Wireless Communications and Networking},
  volume={2020},
  number={1},
  pages={1--27},
  year={2020},
  publisher={SpringerOpen}
}